Just a few years ago, autonomous driving surged into prominence with a seemingly warlike urgency, as though our very survival depended on our cars’ ability to navigate a roundabout while their occupants scrolled through Twitter or made out in the back seat. In Silicon Valley, carmakers set up labs while Google and Uber hired engineers from universities around the world. Audi, Ford, BMW and Mercedes-Benz pivoted their tech-bragging from the car shows to CES, where they extolled the safety-enhancing virtues of self-driving cars. Insurance companies wrote their own obituaries, as driving yielded to mobility. Millennials shrugged. It was madness.

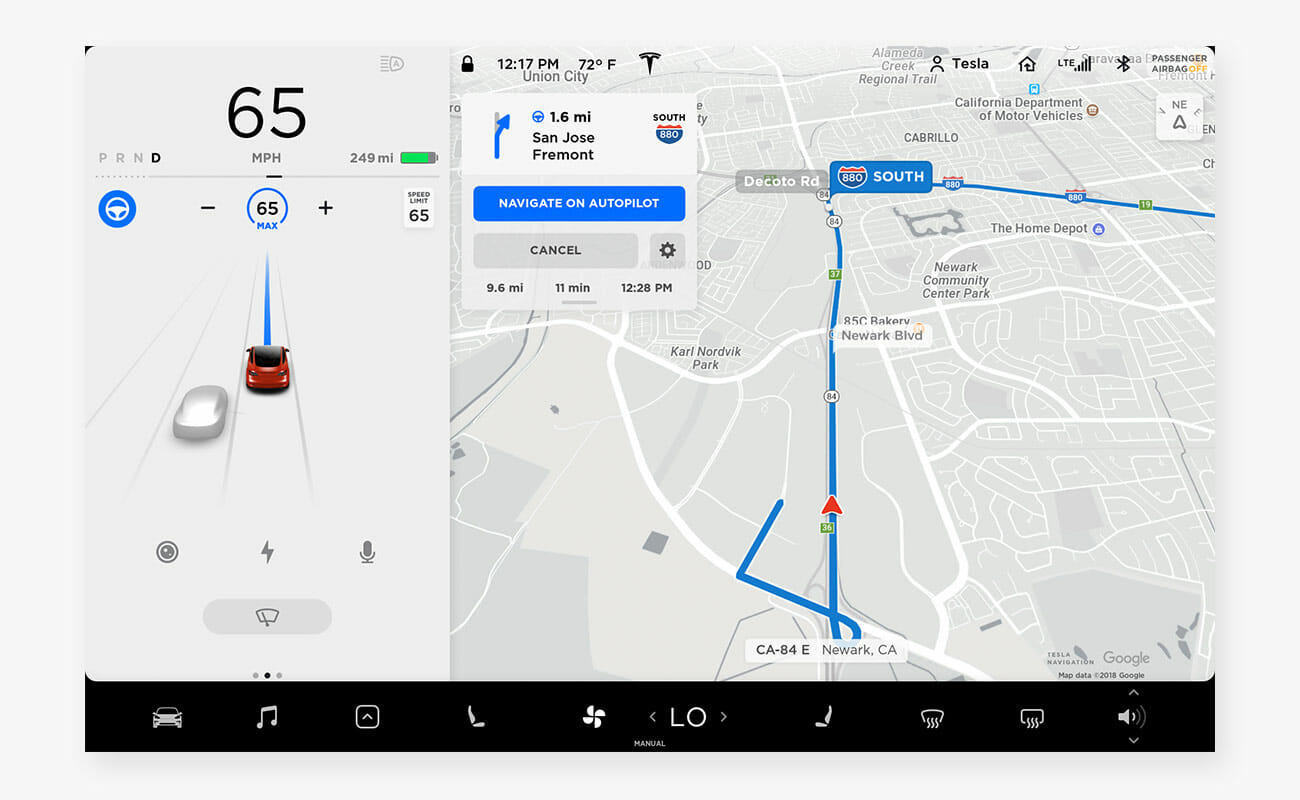

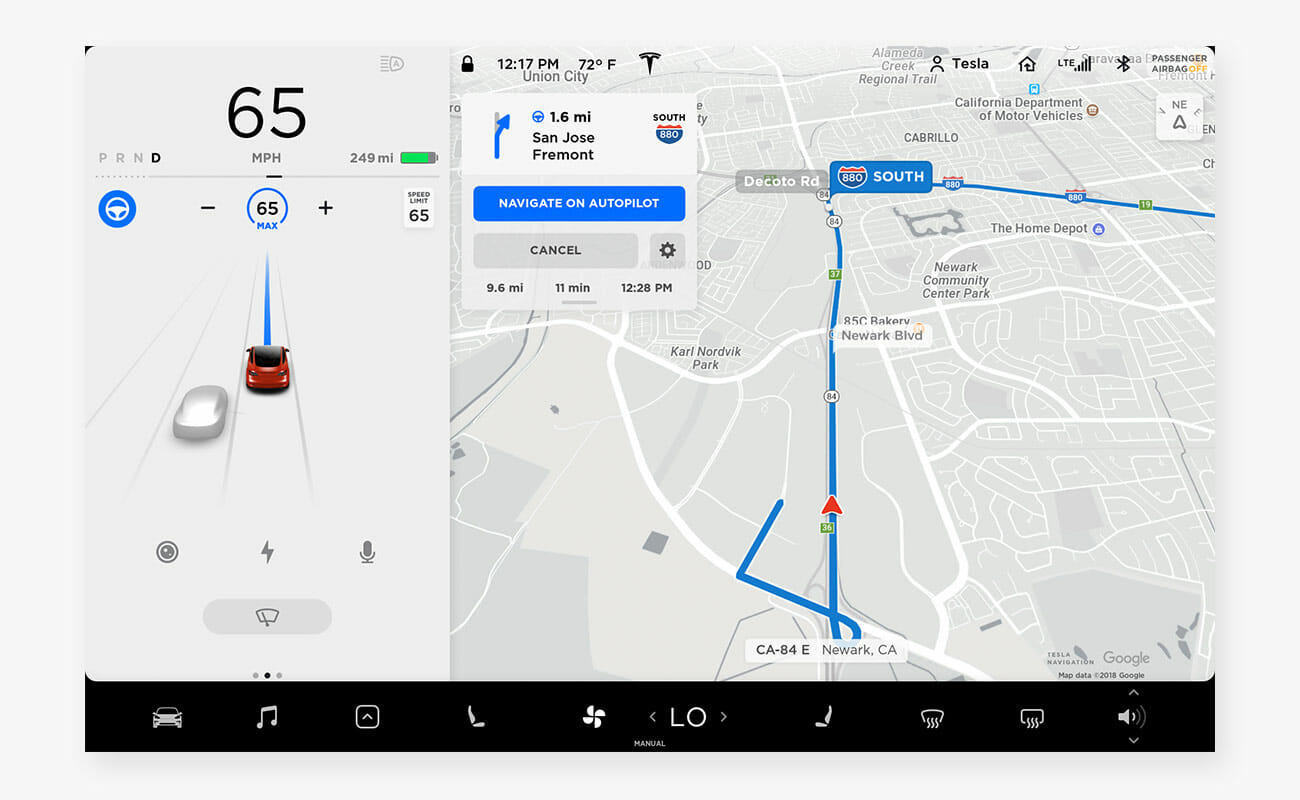

We have two things to thank for this: the baseline ability of modern computing to potentially take driving duties off the hands of humans, and Tesla. The former was inevitable; the latter, a pure force of nature. Tesla CEO Elon Musk has been pushing hard for autonomy since the Model S sedan debuted in 2012. Tesla’s ever-improving Autopilot system is now, arguably, the most advanced driver-assistance system on the roads, even if it’s still a far cry from true autonomy. It can drive itself down highways, pass cars, switch roads at interchanges, exit when needed and even park itself at your destination. The auto industry reacted to Tesla’s autonomy push much as it did to Tesla’s electric-vehicle push: with all-out panic. Hence the labs and shows and bold proclamations of near-future capability. Mercedes-Benz, Cadillac and Audi are in hot pursuit, as is Google’s Waymo, which is the de facto master of surface-street navigation while Tesla dominates carefree flying down the highways.

But while there’s still forward momentum, there is also hand-wringing over such truly critical details as whether autonomous cars should be allowed to mow down pedestrians in order to protect their passengers, and who will be liable when that happens. For the most part, the urgency has dialed down dramatically from its frantic peak a year or so ago. This second shift in mindset stems from the dawning realization that autonomy is going to be very hard to get right. In fact, it may never happen at all.

What Is Autonomous Driving?

First, though, a brief primer. Most of the systems engineered in the direction of autonomous driving are technically referred to as ADAS, or advanced driver assistance systems. These include now-common features such as adaptive cruise control, lane-keep assist, automatic emergency braking, pedestrian detection and traffic-sign detection, among others. Cars can be equipped with multiple cameras and radar sensors to perceive the environment around them, enabling the car to drive itself with little trouble in many situations. Cars will eventually be widely equipped with laser-based LIDAR as well, which should significantly boost their ability to read their surroundings on the fly.

Levels of Driving Automation

As these systems stack up and become knitted together, something resembling true autonomous driving will emerge: the Level 5 system, topmost on the widely accepted six-level scale of driving automation defined by SAE International. Right now we’re capable of Level 2, which means partial automation. (Level 0 represents no automation at all; Level 1 covers basic driver assistance, such as adaptive cruise control working on its own.) All current Level 2 systems on the road still require active driver participation. The operator of the vehicle must pay attention and be ready to take over at a moment’s notice, even if the car is technically handling the mechanics of movement and keeping an eye on things itself. This is as true for Tesla’s Autopilot as it is for Cadillac’s Super Cruise. It’s both a practical necessity, since the technology is still new and largely unproven, and a legal one: Regulations require humans at the controls of moving vehicles.

Level 3 systems let the vehicle handle most driving conditions, but the driver must still be vigilant and ready to take over. This is increasingly seen as highly problematic, given the unreliability of human drivers, who are easily distracted by smartphones or simply unable to pay sustained attention to something that’s supposed to be handled by a machine. The high-profile Uber crash in Arizona, in which a pedestrian was killed, solidified the idea in many minds that the handoff from machine to person couldn’t be trusted.

As a result, carmakers are increasingly leaning toward leapfrogging Level 3 entirely and going straight to Level 4, in which the car would manage nearly all driving duties with no human intervention and no need for persistent human vigilance. There would still be constraints on when and where such vehicles could operate, most likely excluding challenging environments such as unpaved roads or whiteout blizzard conditions. But this version would indeed allow you to doze on your daily commute, and it would almost certainly improve road safety dramatically. You don’t even necessarily have to worry about losing your ability to drive your own car. While there may be times and roads that mandate full autonomy (mostly with an eye toward improving traffic flow), Level 4 cars will share the road with manually driven cars, and will even make those human drivers safer, simply by reacting faster to avoid collisions with them. So you collectors of vintage 911s and Defenders and future-vintage STis and Boxsters will still be able to go out for some white-knuckle Sunday drives. Just don’t be surprised if the other cars on the road steer clear.

Full Autonomy May Be Impossible

But what about Level 5, full autonomy? That’s even more problematic than Level 3, because it may indeed be impossible, a sentiment echoed in the fall by Waymo CEO John Krafcik. The reason is that true autonomy is something quite different from just really good ADAS. In its purest form, it will need to include such nebulous, human-like capabilities as judgment, conjecture and even wisdom. An autonomous car must be able to, say, discern the difference between fog and smoke from a distance, lest it propel you headlong into a forest fire from which you may not emerge. It must possess the kind of sixth sense that human drivers have, the one that tells you, from the slightest clues in a person’s behavior or movement, that the kid riding his bike down the street ahead probably doesn’t actually know you’re there and is about to cut right in front of you. It must be able to look at a damaged, muddy road on a hillside during a storm, suspect it might give way under the car’s weight, and just say “nope.”

The Crucial Human Element

All that said, autonomous cars must ultimately also obey the commands of their owners. It may be true that the muddy road will not support the weight of the vehicle, but it may also be true that the road behind is quickly being washed out by a flood. There may be no choice, and the car must try anyway, even if its best guess is to stop dead in its tracks. These, of course, are the so-called edge cases: the far-out, highly unlikely scenarios that nevertheless happen all the time because there are countless variations of them. In reality, we see them every day while driving, but don’t give them a moment’s thought. We can tell when two guys unloading a fridge from a truck are about to tip it over in our direction, so we give them a wide berth. Even for something as simple as parking your car at a weird angle so you and your roommates can all fit in your driveway, your car has to be able to understand what you’re asking and do as instructed. With computers, all this stuff is far easier said than done. But that’s what true autonomy will need.

Despite these profound challenges, there’s still a sporting chance that such capability will arrive. After all, the world is finite: we know all the roads, all the rules and indeed nearly all of the edge cases, and computers think fast. With enough machine learning under their belts, and enough real-world practice reacting to all the little gotchas of driving, the machines will indeed become quite good, and ultimately better than mere humans. In many respects, they already are. The question is whether we’ll ever be truly able to turn over the reins completely.